Pleno Inc.

Pleno recently came out of stealth and announced a $15M round for their platform, which seems PCR-like but isn’t PCR. There’s almost no information available on the platform, but they state that it “is capable of detecting up to 10,000 targets per sample and processing up to 10,000 samples per day”.

The technical side is even vaguer, describing the technology as Hypercoding™, which uses techniques developed for telecoms (perhaps I should call the Reticula approach Hypocoding?).

Aside from this, there’s not much information available. There don’t appear to be any published patents. But it’s always fun to engage in some (largely baseless) speculation.

Firstly, it seems reasonably clear that they are using an optical approach. They have open positions for a “Lead Optical Engineer” with experience in laser-based fluorescent detection systems and a “Software Development Lead” whose role includes “algorithms for simulation and analysis of large, image-based datasets”.

Lightfield?

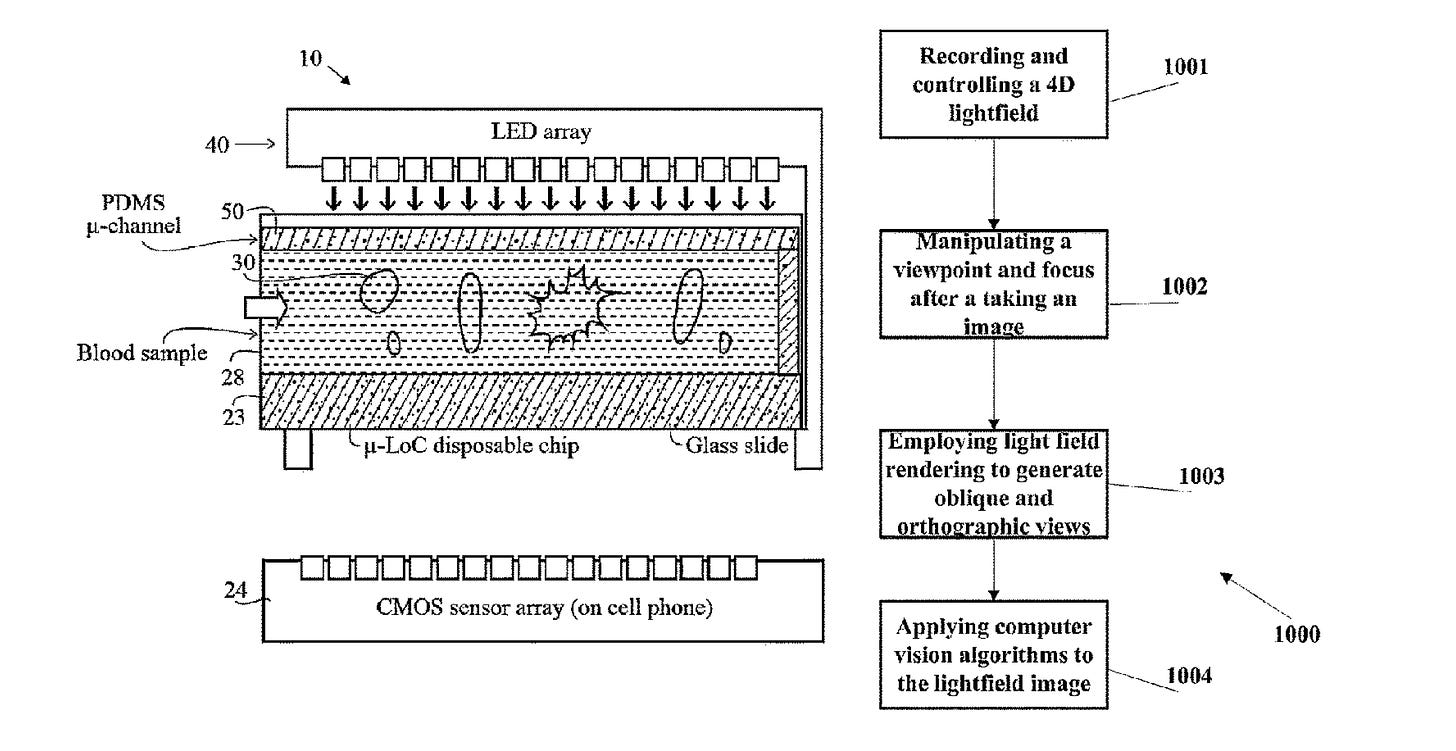

The name of the company is also interesting. Pleno suggests perhaps plenoptic? Plenoptic (or lightfield) cameras were all the rage a few years back. The idea is not to rely on traditional focusing alone, but rather to capture the direction of each light ray as it enters the camera. In the context of a camera this lets you focus after acquisition, and perform other neat tricks.

Pleno CEO Pieter Van Rooyen has one patent covering the application of lightfield imaging to microscopy (blood sample) applications. But it’s from way back in 2010 and doesn’t seem particularly relevant, so this is probably a bit of a stretch…

10,000 Samples a Day

10,000 samples a day is curious wording. If you could process individual samples quickly, I’d imagine you’d say 10 seconds per sample or similar. This suggests to me that the platform probably processes 384-well plates or similar (such plates are shown on their site), with each plate taking ~1 hour.
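The arithmetic behind that guess can be sketched quickly. This is back-of-the-envelope only: the 10,000 samples per day figure is Pleno’s, but one sample per well, a single instrument, and round-the-clock operation are all my assumptions.

```python
import math

SAMPLES_PER_DAY = 10_000    # figure quoted by Pleno
WELLS_PER_PLATE = 384       # assumption: standard 384-well plate, one sample per well
MINUTES_PER_DAY = 24 * 60   # assumption: one instrument running around the clock


def plates_per_day(samples: int = SAMPLES_PER_DAY, wells: int = WELLS_PER_PLATE) -> int:
    """Number of plates needed to hold the day's samples (last plate may be partly empty)."""
    return math.ceil(samples / wells)


def minutes_per_plate(samples: int = SAMPLES_PER_DAY,
                      wells: int = WELLS_PER_PLATE,
                      minutes_available: int = MINUTES_PER_DAY) -> float:
    """Time budget per plate if plates are run back to back."""
    return minutes_available / plates_per_day(samples, wells)


def seconds_per_sample(samples: int = SAMPLES_PER_DAY,
                       minutes_available: int = MINUTES_PER_DAY) -> float:
    """Equivalent per-sample time if samples were processed one at a time."""
    return minutes_available * 60 / samples


print(plates_per_day())                # 27
print(round(minutes_per_plate(), 1))   # 53.3
print(seconds_per_sample())            # 8.64
```

So 10,000 samples a day works out to roughly 27 plates at a little under an hour each, or just under 9 seconds per sample if they were run serially. Both readings fit the headline number; the plate-based one just seems more plausible to me.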

This would also make it easier to detect 10,000 targets, particularly if you need to sequentially apply different excitation wavelengths etc.

Baseless Speculation

So, I suspect they’re using a neat imaging approach to look at multiple (perhaps 100s of) samples in parallel. “Targets” kind of suggests that they are using some kind of label. But 10,000 labels is a lot of labels… and it’s not clear how you’d create such a vast array of labels.

It would be easier if you had some kind of array… but I suspect this isn’t the case here.

The “Hypercoding” kind of suggests to me that they are incorporating multiple pieces of information from each probe. I could see them using a combination of factors (wavelength, fluorescence lifetime etc.) or perhaps incorporating binding kinetics. You could, for example, imagine some kind of single-molecule, aptamer-based platform where on/off binding helps identify the label type.
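To get a feel for why combining properties helps, here is a minimal sketch of the counting argument. Everything in it is hypothetical: the dimension names come from my guesses above, the level counts are made up, and it assumes each property can be read out independently and without error, which a real system would not manage.

```python
import math


def code_space(levels_per_dimension: dict) -> int:
    """Distinct labels available if every dimension is read out independently."""
    return math.prod(levels_per_dimension.values())


def min_levels_needed(targets: int, dimensions: int) -> int:
    """Smallest equal number of levels per dimension with levels**dimensions >= targets."""
    levels = 1
    while levels ** dimensions < targets:
        levels += 1
    return levels


# Hypothetical level counts, purely for illustration
example = {"wavelength": 4, "lifetime": 5, "binding_kinetics": 5}
print(code_space(example))  # 100

# How many distinguishable levels per dimension would 10,000 targets need?
for dims in (1, 2, 3, 4):
    print(dims, min_levels_needed(10_000, dims))
# 1 10000
# 2 100
# 3 22
# 4 10
```

With a single property you would need 10,000 distinguishable labels, which seems hopeless. With four independent properties you would need only about 10 distinguishable levels of each, which at least sounds like something you could imagine building.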

All of this is, of course, completely baseless speculation and, with so little to go on, is likely completely inaccurate. But baseless speculation can be fun…